THE AUTHOR:

Barnabe Cargill, Vice President of Marketing and Strategy at Jus Mundi

Every legal AI vendor claims its platform is accurate, reliable, and able to perform at a "senior associate level." But what does that actually mean, and how do you know it's true? Independent validation answers that question.

This is why Jus Mundi commissioned Vals AI, a Silicon Valley-based legal AI evaluator known for the VLAIR report, to independently assess the performance gains from Jus AI V1 to V2 on the kinds of work international arbitration practitioners actually do. No vendor oversight, no selective reporting: just rigorous, transparent testing.

The results revealed exactly what we hoped an independent evaluation would: a clear picture of how Jus AI performs, measured against meaningful professional standards, validated by domain experts, and reported with full transparency.


In this article, we're sharing the results: what Vals AI tested, what they found, and what it means for practitioners considering AI tools for arbitration work. If you're making decisions about legal AI tools, this is what independent, transparent evaluation looks like, and what benchmarks can reveal about real-world performance.

The Methodology

Vals AI is a Silicon Valley-based legal AI evaluator backed by Stanford research, specializing in rigorous assessment of legal AI systems. For the assessment of Jus AI V1 vs. V2, we gave Vals AI complete control. Our only requirements: make it rigorous, make it transparent, and report everything you find. Here's what they did:

Test Design

Vals AI enlisted international arbitration experts to create 60 questions spanning the real work practitioners do, including:

- Finding key facts and procedural details in complex awards

- Extracting and comparing reasoning from multiple cases

- Drafting preliminary arguments and legal analyses

- Verifying citations for accuracy and faithfulness

Quality Dimensions

Each response was evaluated across five dimensions:

- Correctness: is the answer or conclusion right?

- Relevance: do the citations actually support the claims?

- Faithfulness: are statements backed by sources, or hallucinated?

- Global Relevance: does the response respect constraints and directly address the query?

- Language & Format: is it professionally written and structured?

Validation

Every output was scored independently by LLM-based judges and then cross-checked against the assessments of international arbitration experts on a sample of questions. The results showed a 74% overall agreement rate between the AI judges and the human experts, confirming that the scoring reflected genuine professional judgment.
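As a rough illustration of what an "agreement rate" measures, the sketch below computes simple percent agreement between an LLM judge's verdicts and an expert's verdicts on the same items. It assumes each verdict is a pass/fail label on a single rubric check. The function name `percent_agreement` and the sample data are hypothetical, and the Vals AI report may define agreement differently (for example, per question or per dimension).

```python
def percent_agreement(judge_labels: list[bool], expert_labels: list[bool]) -> float:
    """Share of items on which the LLM judge and the human expert gave the same pass/fail verdict."""
    if len(judge_labels) != len(expert_labels):
        raise ValueError("both raters must label the same items")
    if not judge_labels:
        raise ValueError("no items to compare")
    # Count the items where both raters reached the same verdict.
    matches = sum(j == e for j, e in zip(judge_labels, expert_labels))
    return matches / len(judge_labels)


# Hypothetical verdicts on eight rubric checks (True = check passed).
judge = [True, True, False, True, False, True, True, False]
expert = [True, False, False, True, False, True, True, True]

print(f"{percent_agreement(judge, expert):.0%}")  # prints "75%"
```

Simple percent agreement does not correct for the agreement two raters would reach by chance. Chance-corrected statistics such as Cohen's kappa are a common complement.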

The Results

Strong Performance Across the Board

For Jus AI 2, the results were both quantitative and practical: clear, measurable gains in the areas that matter most to arbitration professionals. Across 60 expert-authored tasks and more than 1,000 evaluation points, Jus AI 2 consistently outperformed its predecessor, delivering more accurate reasoning, stronger citations, and outputs closer to professional legal writing.

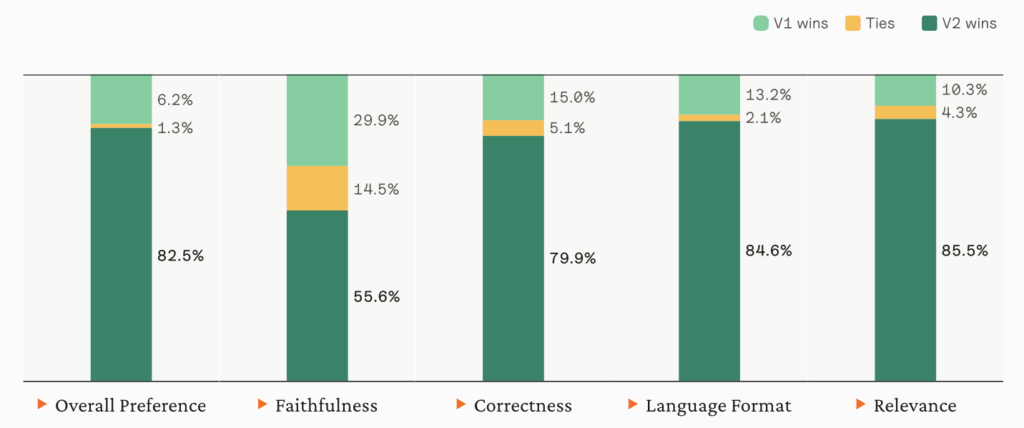

In side-by-side comparisons, Vals evaluators preferred Jus AI 2's responses over those of the previous version 82.5% of the time.

This wasn't a preference in just one area; it was consistent across every quality dimension Vals AI measured, with better answers and stronger sources. That kind of across-the-board improvement changes how you can use a tool in practice. But overall preference tells only part of the story. What really matters is how Jus AI 2 performed against professional standards in arbitration work.

Where Quality Shows: The Five Dimensions

Vals AI found strong foundational performance across all five dimensions, with a significant share of responses reaching excellence. Here's how Jus AI 2 performed on key quality dimensions:

Correctness: 73.1%

Responses reached the right legal conclusions and included the components you'd expect in high-quality, senior associate-level work. Even more telling, 40% of questions achieved perfect scores, passing every quality check Vals AI applied. That means 2 out of every 5 queries produced output that was completely correct, properly cited, and professionally formatted, ready for you to use with minimal review.

Relevance: 65.9%

Citations properly supported the answers about two-thirds of the time (65.9%), meaning sources were legally and contextually appropriate, current, and relevant, with pinpoint citations to the exact supporting material. These improvements translate into fewer errors, tighter sourcing, and less manual verification for lawyers reviewing results.

Faithfulness: 85%

This is the "hallucination test," and Vals AI found that 85% of responses had adequate source support overall. Nearly half (46.7%) achieved perfect alignment: every claim matched to source material, no fabrications, and complete accuracy in representing what sources actually say. With nearly half of responses showing perfect faithfulness and the vast majority having solid support, the risk of citing non-existent cases or misrepresenting holdings with Jus AI 2 is significantly mitigated.

Language & Format: 61.7%

61.7% of outputs met professional legal writing standards: clear structure, appropriate tone, and proper formatting. That is the kind of presentation you could include in client communications or tribunal submissions with minimal editing. Professional presentation directly affects how much time you spend revising AI outputs. When outputs come out clean, you're editing less and using more.

High-Impact Workflows

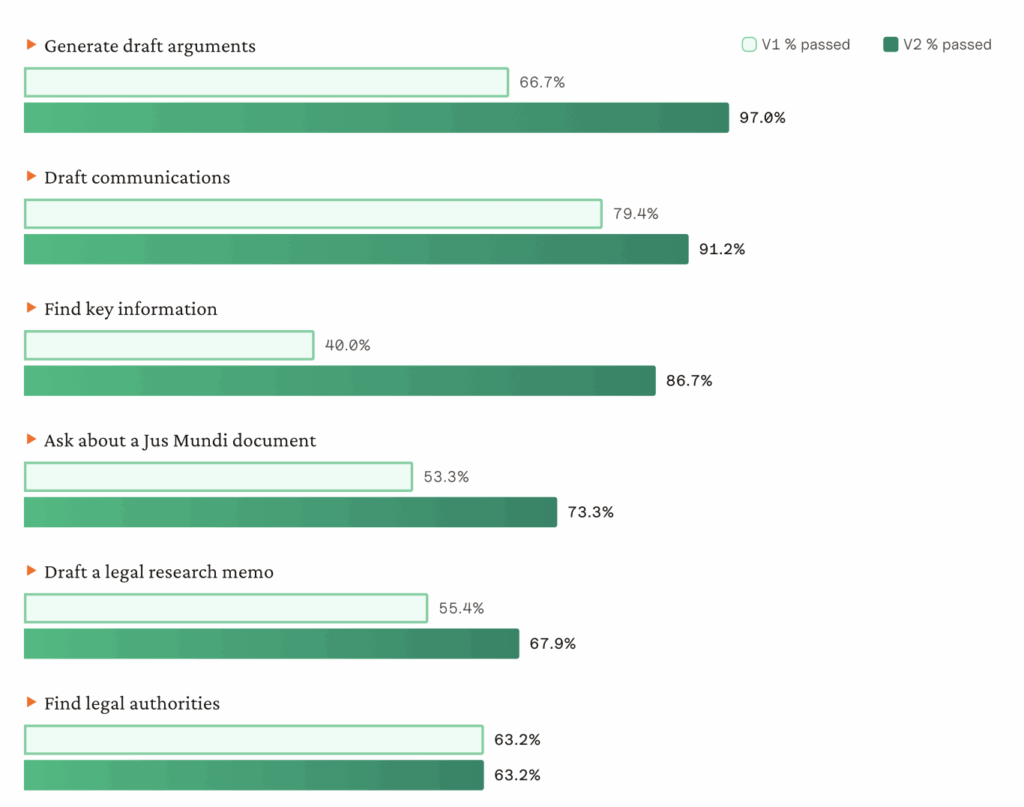

Vals AI tested different task categories to reveal where Jus AI 2 delivers the most value.

Generating draft arguments: 97%

Nearly perfect performance on structuring legal reasoning with case support. The task combines retrieval (finding relevant authorities) with structured output (organizing an argument), exactly what modern AI handles well. When you need a first draft of an argument on established legal principles, the quality is consistently high.

Draft communications: 91.2%

Professional correspondence (letters, notices, emails) performed exceptionally well. The format is predictable, the tone requirements are clear, and the structure follows known patterns. AI excels here, producing client-ready drafts.

Finding key information: 86.7%

Document review and fact extraction showed strong performance. When you need to identify key dates, entities, corporate structures, or specific facts buried in case materials, Jus AI 2 handles it reliably. This is pure retrieval and pattern matching, an AI strength.

Drafting research memos: 67.9%

Comprehensive analysis requiring synthesis of multiple sources and legal reasoning. Performance was good, providing strong foundations that benefit from lawyer refinement.

Finding legal authorities: 63.2%

Reliable for identifying relevant tribunal decisions and cases. Useful for casting a wide net, and most valuable when combined with lawyer judgment about which sources are strongest.

The numbers show that Jus AI 2 isn't just more advanced; it's more dependable. Independent evaluation confirmed what practitioners value most: correctness you can rely on, citations you can verify, and outputs you can actually use.

What This Means for Practitioners: From Faster Answers to Stronger Arguments

Independent validation isn't just about proving accuracy; it's about showing how performance translates into better work. For arbitration professionals, the Vals AI results reveal tangible benefits at every stage of legal research and drafting.

Faster, More Confident Research

When you’re under pressure to deliver quick, reliable answers, every extra search, cross-check, or citation verification adds up. With Jus AI 2’s +47-point improvement in identifying key information, practitioners can now surface relevant facts, procedural details, and authorities faster and with more confidence in their completeness.

Stronger First Drafts, Less Rework

With a +30-point gain in drafting quality and reasoning, Jus AI 2 now delivers more coherent, better-structured drafts that reflect the analytical depth practitioners expect. For lawyers, this means starting from a stronger baseline: a well-reasoned draft that needs refinement, not reconstruction.

Greater Trust in Citations and Sources

An answer is only as strong as the sources behind it. Jus AI 2's +20% improvement in citation relevance (and far fewer unsupported references) means lawyers can now trust that cited sources actually back the conclusions drawn. Users can also check every source Jus AI relies on.

Usable Outputs That Fit Legal Workflows

With a 60% improvement in language and formatting, Jus AI 2 produces outputs that read like legal documents: clear structure, professional tone, and logical flow. For practitioners, this reduces cognitive friction: no more reformatting messy text or rewriting to fit legal style.

Reliable Performance Across Tasks

Doubling the number of perfect scores also means greater consistency. Lawyers can expect dependable quality across tasks, whether procedural summaries, argument outlines, or citation validation. That consistency allows teams to start building internal workflows and templates around Jus AI, knowing that quality won't fluctuate dramatically from one task to the next. For firms and legal teams, that's not just efficiency; it's operational trust.

Confidence

Independent validation signals that Jus AI isn't an early experiment but a proven tool that meets the standards of legal reasoning, sourcing, and professionalism that arbitration demands. For lawyers, that means confidence to use it where it matters: in research, client-facing work, drafting, and decision-making support.

AI as Amplifier, Not Replacement

AI is getting better, but it's not replacing senior associates; it's making them more effective. While Jus AI helps lawyers build stronger arguments faster and ultimately win cases, human review remains essential because legal work requires strategic judgment, client relationship management, and contextual understanding that no AI provides.

The Practical Takeaway

If you’re evaluating AI tools for your arbitration practice, here’s what matters:

- Look for independent validation. Not cherry-picked examples. Third-party evaluation with transparent methodology and domain expert involvement.

- Understand performance by task type. Overall scores hide critical details. You need to know where AI excels and where it struggles so you can design workflows that leverage its strengths and mitigate its weaknesses.

- Pilot test with your own work. Benchmarks provide evidence, but nothing replaces testing AI on the actual work you do. Try it on real research questions, real drafting tasks, real document review. See if benchmark performance translates to your practice.

- Design appropriate quality control. Even when AI performs well, professional responsibility requires review. The question isn't whether to review AI outputs; it's how much review different tasks require, and what that review should focus on.

- Deploy thoughtfully, not blindly. AI is a powerful tool when used strategically. The practitioners who get the most value will be those who understand both capabilities and limitations and design workflows accordingly.

Raising the Standard for Trust in Legal AI

Independent validation is more than a milestone; it's a mandate for how legal AI should be built, tested, and trusted. The Vals AI evaluation didn't just confirm Jus AI's accuracy; it proved that rigorous, transparent testing can turn claims into evidence and innovation into reliability. As AI becomes a fixture in legal work, this kind of accountability will define the difference between tools that impress and tools that endure.

Ready to see the full methodology and detailed findings?

Download the complete Vals AI evaluation report to review the assessment framework, examine performance across all quality dimensions, and explore detailed results by task category.

About Jus Mundi

Founded in 2019 and recognized as a mission-led company, Jus Mundi is a pioneer in the legal technology industry dedicated to powering global justice through artificial intelligence. Headquartered in Paris, with additional offices in New York, London, and Singapore, Jus Mundi serves over 150,000 users from law firms, multinational corporations, governmental bodies, and academic institutions in more than 90 countries. Through its proprietary AI technology, Jus Mundi provides global legal intelligence, data-driven arbitration professional selection, and business development services.

Press Contact: Helene Maïo, Senior Digital Marketing Manager, Jus Mundi

*The views and opinions expressed by authors are theirs and do not necessarily reflect those of their organizations, employers, or Daily Jus, Jus Mundi, or Jus Connect.